What is Multimodal AI?

Multimodal AI is a fast-growing subfield of artificial intelligence concerned with systems that process and understand data in several formats, or “modalities,” such as text, images, audio, and video, in a coordinated manner. Combining modalities gives a system a fuller picture than any single input can: visual and auditory cues together can improve human-machine interaction, and pairing text with images supports new kinds of content generation. This is closer to how people perceive the world, and it matters most in fields such as healthcare and virtual reality, where a single data type is often not enough to make an informed decision. As the field matures, multimodal techniques are expected to make intelligent systems more adaptable, more intuitive, and better suited to real-world problems.

Here are some of the key concepts:

Multimodal Data

Multimodal data combines more than one type of information. A dataset might, for example, pair text with images or video with audio. By processing several types of data together, an AI system can draw on complementary sources and pick up details that a single modality would miss.
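To make the idea concrete, here is a minimal sketch of how one multimodal record might be represented in Python. The class name `MultimodalSample`, its fields, and the file names are illustrative assumptions, not part of any particular library or of Experion's tooling.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MultimodalSample:
    """One record that may carry several modalities (hypothetical layout)."""
    text: Optional[str] = None
    image_path: Optional[str] = None
    audio_path: Optional[str] = None

    def modalities(self) -> List[str]:
        """List the modalities that are actually present in this record."""
        present = []
        if self.text is not None:
            present.append("text")
        if self.image_path is not None:
            present.append("image")
        if self.audio_path is not None:
            present.append("audio")
        return present


# A product listing with a caption and a photo, but no audio.
sample = MultimodalSample(text="Red running shoes", image_path="shoes.png")
available = sample.modalities()
```

Keeping every field optional reflects a common practical situation: real datasets often have records where one modality is missing, and downstream code needs to know which ones are available.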

Feature Extraction

For each data modality, relevant features need to be identified. A multimodal system extracts salient aspects such as colors or shapes in images, keywords in text, or tones in audio. These features are then encoded, typically as numeric vectors, so that the AI can process and learn from them.
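The following sketch shows what per-modality feature extraction can look like in its simplest form. It assumes a keyword count for text and an average color for images. Production systems usually rely on learned encoders instead, and the function names and sample data here are hypothetical.

```python
from collections import Counter


def text_features(text, vocabulary):
    """Count how often each vocabulary word appears in the text."""
    counts = Counter(text.lower().split())
    return [float(counts[word]) for word in vocabulary]


def image_features(pixels):
    """Return the mean red, green, and blue intensity, scaled to [0, 1]."""
    n = len(pixels)
    return [sum(p[c] for p in pixels) / (255.0 * n) for c in range(3)]


vocabulary = ["red", "car", "dog"]
caption = "A red car parked next to another red car"
# A tiny 4-pixel "image" given as (R, G, B) tuples.
pixels = [(255, 0, 0), (255, 0, 0), (0, 0, 255), (0, 0, 0)]

text_vec = text_features(caption, vocabulary)   # [2.0, 2.0, 0.0]
image_vec = image_features(pixels)              # [0.5, 0.0, 0.25]
```

Both modalities end up as plain lists of numbers, which is what allows later stages to combine them.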

Fusion

Fusion is the process of combining features extracted from different modalities to form a unified understanding. For instance, in video analysis, the AI might merge visual information (such as facial expressions) with audio cues (like tone of voice) to judge a speaker's mood more reliably than either signal would allow on its own.
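Two common ways to fuse modalities are early fusion, which joins feature vectors before a model sees them, and late fusion, which combines the outputs of per-modality models. The sketch below illustrates both under simple assumptions; the feature values, scores, and weights are made up for the example.

```python
def early_fusion(*feature_vectors):
    """Concatenate per-modality feature vectors into one joint vector."""
    fused = []
    for vec in feature_vectors:
        fused.extend(vec)
    return fused


def late_fusion(scores, weights):
    """Combine per-modality prediction scores with a weighted average."""
    total = sum(weights)
    return sum(s * w for s, w in zip(scores, weights)) / total


# Hypothetical features for one video clip.
visual = [0.8, 0.1]        # e.g. facial-expression features
audio = [0.3, 0.6, 0.9]    # e.g. tone-of-voice features
joint = early_fusion(visual, audio)

# Hypothetical "speaker is upset" scores from a visual and an audio model,
# weighted equally.
combined = late_fusion(scores=[0.9, 0.5], weights=[1.0, 1.0])
```

Early fusion lets a single model learn interactions between modalities, while late fusion keeps the per-modality models independent and is easier to apply when one input is missing.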

Joint Learning

Instead of training a separate model for each modality, joint learning trains a single model on all modalities at once. Because the model learns from its inputs in a coordinated manner, it can pick up relationships between modalities, which often improves accuracy over models trained in isolation.
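As a rough illustration of joint learning, the sketch below trains one small logistic-regression model on concatenated text and image features, so a single loss updates the weights for both modalities. The toy data, the learning rate, and the choice of logistic regression are assumptions made for the example, not a description of any specific production system.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, text_vec, image_vec):
    """Score one sample using a single model over both modalities."""
    joint = text_vec + image_vec  # list concatenation
    z = bias + sum(w * x for w, x in zip(weights, joint))
    return sigmoid(z)


def train(samples, epochs=500, lr=0.5):
    """Stochastic gradient descent on the logistic loss."""
    n_features = len(samples[0][0]) + len(samples[0][1])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        for text_vec, image_vec, label in samples:
            joint = text_vec + image_vec
            error = predict(weights, bias, text_vec, image_vec) - label
            # One error signal updates the weights of both modalities.
            for i, x in enumerate(joint):
                weights[i] -= lr * error * x
            bias -= lr * error
    return weights, bias


# Toy data: the label is 1 only when the text AND the image both signal
# the event, so neither modality is sufficient by itself.
samples = [
    ([1.0], [1.0], 1),
    ([1.0], [0.0], 0),
    ([0.0], [1.0], 0),
    ([0.0], [0.0], 0),
]
weights, bias = train(samples)
both_present = predict(weights, bias, [1.0], [1.0])
text_only = predict(weights, bias, [1.0], [0.0])
```

After training, `both_present` should score above 0.5 and `text_only` below it, which a model that only ever saw the text feature could not achieve on this data.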

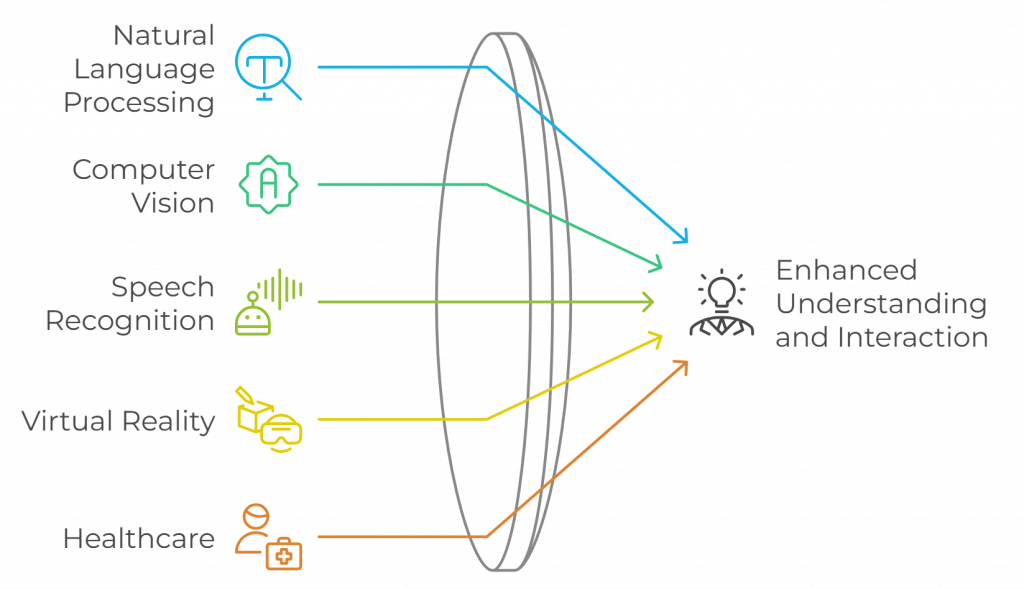

Applications of Multimodal AI

- Natural Language Processing (NLP): Related images or audio provide context that helps a system interpret text, for example when reading video subtitles alongside the footage.

- Computer Vision: Multimodal AI can improve image recognition by combining visual data with additional information like text or sound.

- Speech Recognition: Visual cues (like lip movements) can be combined with audio data for more accurate transcription.

- Virtual Reality (VR): By integrating visuals, sound, and even tactile feedback, multimodal AI creates immersive and responsive VR experiences.

- Healthcare: Multimodal AI assists in diagnosing conditions by analyzing medical images, patient records, and clinical test results together, offering a holistic view for better treatment planning.

Experion’s Multimodal AI Initiatives

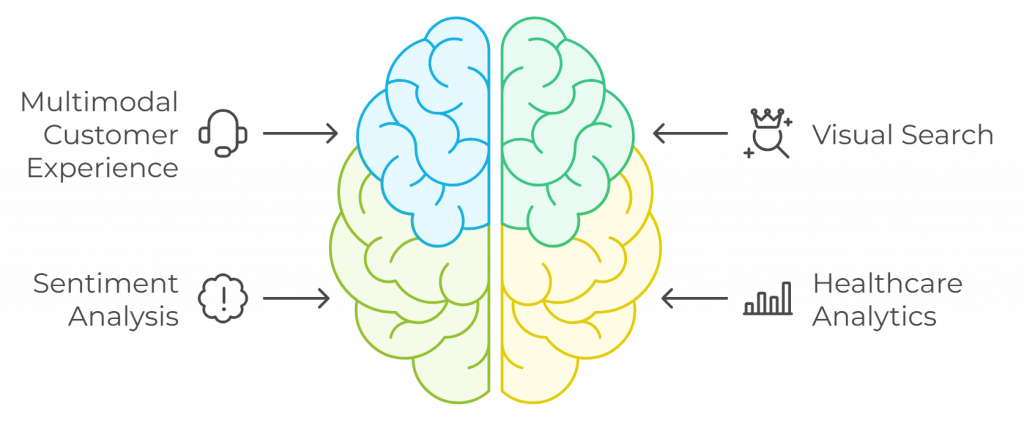

At Experion, we are at the forefront of multimodal AI research and development. Our focus is on developing innovative solutions that leverage the power of multimodal data to solve complex problems. Some of our key initiatives include:

- Multimodal Customer Experience: Analyzing customer interactions across multiple channels, such as voice, chat, and email, to provide personalized and efficient support.

- Visual Search: Enabling users to search for products or information based on images or videos.

- Sentiment Analysis: Understanding the sentiment of text, images, and audio data to gain insights into customer satisfaction.

- Healthcare Analytics: Analyzing medical images, patient records, and other data to improve disease diagnosis and treatment outcomes.

Conclusion

Multimodal AI has the potential to change how many industries work with data. As the underlying technology advances, we can expect more capable multimodal systems that tackle increasingly complex problems. At Experion, we are committed to advancing this field and to building solutions that benefit our clients and society as a whole. Partner with Experion to put multimodal AI to work for your business, combining data, technology, and innovation to take it to the next level.