Data modernization is not just about moving data from legacy systems to modern platforms; it’s about creating a robust framework that supports analytics, AI, and secure, efficient operations. In an era where data drives decision-making and customer experiences, the Banking, Financial Services, and Insurance (BFSI) sector is increasingly recognizing the need for data modernization. Despite the clear benefits, many financial institutions struggle with legacy systems and data quality issues. This blog outlines the key elements and best practices for building a modern data framework in the BFSI sector.

Key Elements of a Data Modernization Roadmap

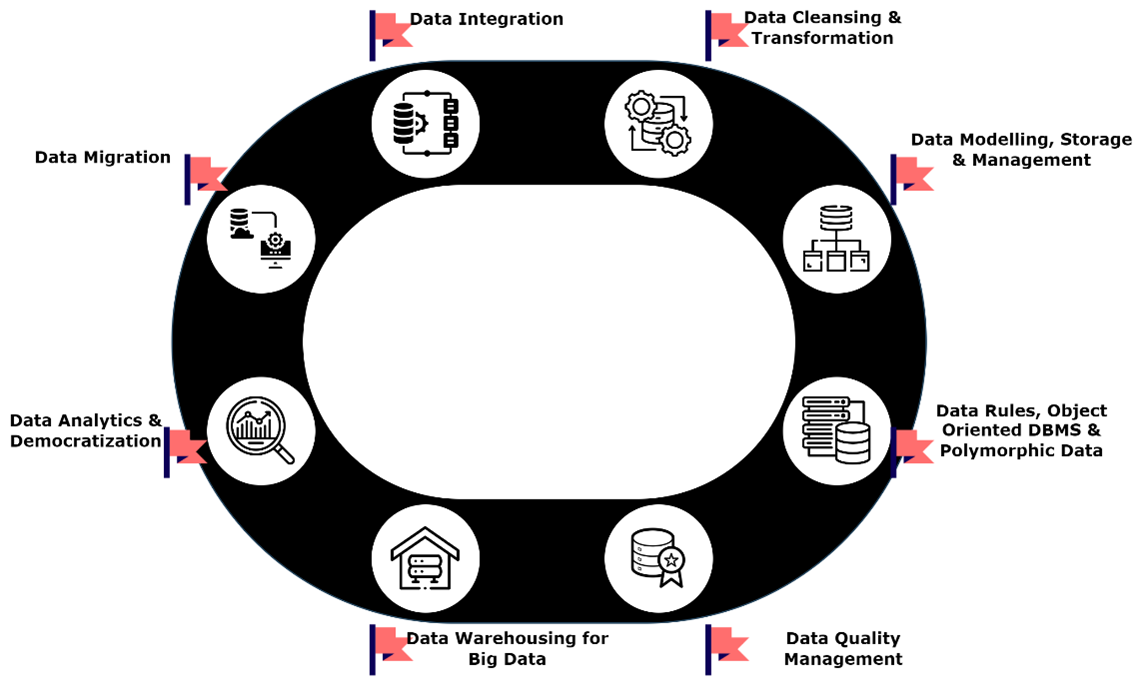

The data modernization process focuses on developing a modern data framework through a series of steps and techniques, with modern technologies at its core. A strong data modernization strategy includes a few critical elements that enable financial enterprises to increase ROI by creating an accessible, scalable, and compliant data ecosystem. The diagram above shows them all in one place. An institution can pursue these milestones individually, or it can group related elements logically and accomplish them together.

- Data Migration

The goal of data migration is to move all your data from legacy platforms to a modern data infrastructure. A solid data migration strategy ensures a smooth transition of clean data from the old platform to the new one with little or no business disruption. This is where cloud migration comes in.

The size of the cloud migration market is estimated at USD 232.51 billion in 2024 and is expected to grow at a CAGR of ~28% during the forecast period (2024–2029).
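
As a rough illustration of what that growth rate implies, assume annual compounding over the five years from 2024 to 2029 and treat "~28%" as exactly 28%. With V as market size in USD billion, r = 0.28, and n = 5, the standard compound-growth formula gives:

$$
V_{2029} = V_{2024}\,(1 + r)^{n} = 232.51 \times 1.28^{5} \approx 232.51 \times 3.436 \approx 799
$$

That is roughly USD 800 billion by 2029 if the estimate holds. This is a back-of-the-envelope projection derived from the two figures above, not an independent forecast.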

Globally, many financial institutions have already moved to cloud platforms or are accelerating their migration, drawn by benefits such as scalability, agility in integration, and easier adoption of emerging technologies across the BFSI ecosystem. In recent years, cloud adoption has also been a significant consideration in IT cost-reduction strategies.

Data migration is a critical step in the data modernization process. It has obstacles, but they are worth overcoming: completing this step simplifies the remaining milestones.
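
One common way to confirm that clean data actually arrived intact is to reconcile row counts and content checksums between a legacy extract and the migrated table. The sketch below is a minimal illustration under stated assumptions: both sides can be read as rows of plain Python values with identical types, and `table_fingerprint` and `reconcile` are hypothetical helpers rather than part of any migration tool. The sample rows are made up.

```python
import hashlib
from typing import Iterable, Sequence


def table_fingerprint(rows: Iterable[Sequence[object]]) -> tuple[int, str]:
    """Return (row_count, digest); the digest does not depend on row order."""
    # Hash each row's repr, then sort the per-row hashes so that ordering
    # differences between source and target do not cause false mismatches.
    row_hashes = sorted(
        hashlib.sha256(repr(tuple(row)).encode("utf-8")).hexdigest() for row in rows
    )
    combined = hashlib.sha256("".join(row_hashes).encode("utf-8")).hexdigest()
    return len(row_hashes), combined


def reconcile(source_rows, target_rows) -> dict:
    """Compare a legacy extract with the migrated copy of the same table."""
    src_count, src_digest = table_fingerprint(source_rows)
    tgt_count, tgt_digest = table_fingerprint(target_rows)
    return {
        "source_rows": src_count,
        "target_rows": tgt_count,
        "counts_match": src_count == tgt_count,
        "content_matches": src_digest == tgt_digest,
    }


# Made-up sample rows: (id, account_id, balance as text).
legacy = [(1, "ACC-001", "2500.00"), (2, "ACC-002", "130.75")]
migrated = [(2, "ACC-002", "130.75"), (1, "ACC-001", "2500.00")]  # same rows, new order
print(reconcile(legacy, migrated))
```

Sorting per-row hashes keeps the check order-independent while still catching a single changed value; on very large tables, teams typically run the same idea per partition or push the hashing into the databases themselves.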

- Data Integration

Building a unified data view through data integration makes the data usable for meaningful consumption in analytics and AI frameworks. In a well-structured data modernization strategy, appropriate cloud-hosted ingestion tools are in place, making data integration scalable, robust, and quick to accelerate. Modern extract-transform-load (ETL) tools support real-time integration, so data becomes available as quickly as possible. This is crucial in BFSI, where up-to-date information is critical; a prime example is the live, accurate integration of financial transactions. It allows institutions to offer more cutting-edge services, which in turn increases customer satisfaction.
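
Near-real-time integration is often built as frequent incremental loads: each run picks up only the records changed since the previous run (tracked with a high-water mark) and upserts them into the target. This is a minimal sketch, assuming the source exposes a reliable `updated_at` timestamp; the `Txn` record and `incremental_load` function are illustrative names, and production ETL tools typically rely on change data capture or streaming instead.

```python
from dataclasses import dataclass
from datetime import datetime
from decimal import Decimal
from typing import Iterable


@dataclass(frozen=True)
class Txn:
    """Hypothetical transaction record from a source system."""
    txn_id: str
    account_id: str
    amount: Decimal
    updated_at: datetime


def incremental_load(source: Iterable[Txn], target: dict[str, Txn], watermark: datetime) -> datetime:
    """Upsert records changed after `watermark` into `target`; return the new watermark."""
    new_watermark = watermark
    for txn in source:
        if txn.updated_at > watermark:      # skip records already loaded
            target[txn.txn_id] = txn        # upsert keyed by transaction ID
            new_watermark = max(new_watermark, txn.updated_at)
    return new_watermark


feed = [
    Txn("T1", "A1", Decimal("100.00"), datetime(2024, 5, 1, 9, 0)),
    Txn("T2", "A2", Decimal("-40.00"), datetime(2024, 5, 1, 9, 5)),
]
store: dict[str, Txn] = {}
wm = incremental_load(feed, store, datetime(2024, 5, 1))

# On the next poll, only the corrected T1 record is newer than the watermark.
feed.append(Txn("T1", "A1", Decimal("120.00"), datetime(2024, 5, 1, 9, 10)))
wm = incremental_load(feed, store, wm)
```

The strict `>` comparison means a record that arrives late with a timestamp at or before the watermark would be missed, which is one reason production pipelines often prefer change data capture over timestamp polling.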

- Data Cleansing & Transformation

Data transformation is essential for harnessing the full potential of data assets, enhancing efficiency, and driving business value. Transformation can involve various operations, including data cleansing, which eliminates errors, inconsistencies, and missing values from large volumes of ingested data, resulting in high-quality, reliable data for analytics and AI/ML solutions. Data transformation can also include standardization, aggregation, encoding of categorical data, binning, smoothing, time-series decomposition, text preprocessing, and more. The choice of transformation depends on the organization’s use case, the nature of the data, and the specific goals of the analysis or modeling task. Comprehensive data transformation helps everyone in the organization understand the data better and makes it easier to work with.
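
A few of the operations above (standardization, deduplication, filling missing values, and binning) can be sketched on a handful of made-up customer records using only the Python standard library. The field names, the median-imputation choice, and the income bands are illustrative assumptions; the right choices depend on the use case.

```python
from statistics import median

# Illustrative income bands: (lower bound inclusive, upper bound exclusive, label).
# Assumes non-negative incomes.
INCOME_BANDS = [(0, 50_000, "low"), (50_000, 100_000, "middle"), (100_000, float("inf"), "high")]


def clean_and_transform(records: list[dict]) -> list[dict]:
    # 1. Standardize: trim whitespace and upper-case country codes (copies each record).
    standardized = [{**r, "country": r["country"].strip().upper()} for r in records]

    # 2. Deduplicate: keep the first record seen for each customer_id.
    seen: set[str] = set()
    deduped = []
    for r in standardized:
        if r["customer_id"] not in seen:
            seen.add(r["customer_id"])
            deduped.append(r)

    # 3. Impute missing income with the median of the known values
    #    (statistics.median raises StatisticsError if no values are known).
    fill = median([r["income"] for r in deduped if r["income"] is not None])
    for r in deduped:
        if r["income"] is None:
            r["income"] = fill
        # 4. Bin: attach an income band label.
        r["income_band"] = next(label for lo, hi, label in INCOME_BANDS if lo <= r["income"] < hi)
    return deduped


raw = [
    {"customer_id": "C1", "country": " us ", "income": 52_000},
    {"customer_id": "C2", "country": "US", "income": None},
    {"customer_id": "C1", "country": " us ", "income": 52_000},  # duplicate row
    {"customer_id": "C3", "country": "gb", "income": 128_000},
]
cleaned = clean_and_transform(raw)
```

The function copies records in step 1, so the raw input stays untouched and can be kept for auditing.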

- Data Modeling, Storage & Management

Banks and financial services institutions collect a great deal of data from customers’ online and offline transactions, social engagements and interactions, feedback, surveys, and more. Data modeling is a discipline that applies wherever people, data, and technology intersect. It is a well-defined approach to creating a visual data model that organizes the data’s attributes, establishes relationships between entities, identifies constraints, and defines the context in which the data is managed. With data integration in place and connected to various systems, it is imperative to establish a data model before moving on to data consolidation and storage. Legacy data frameworks included this essential step, and their modern counterparts cannot skip it either.
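
To make attributes, relationships, and constraints concrete, here is a minimal logical model for two hypothetical entities, `Customer` and `Account`, expressed as Python dataclasses. The entity names, fields, and allowed account types are assumptions for illustration; in practice the same model would usually be captured in a modeling tool or as database DDL.

```python
from dataclasses import dataclass, field
from datetime import date

ACCOUNT_TYPES = {"savings", "current", "loan"}  # illustrative controlled list


@dataclass(frozen=True)
class Customer:
    customer_id: str       # primary key
    full_name: str
    date_of_birth: date


@dataclass(frozen=True)
class Account:
    account_id: str        # primary key
    customer_id: str       # foreign key: each account belongs to one customer
    account_type: str
    opened_on: date

    def __post_init__(self) -> None:
        # Attribute-level constraint: account type must come from the controlled list.
        if self.account_type not in ACCOUNT_TYPES:
            raise ValueError(f"unsupported account type: {self.account_type}")


@dataclass
class BankModel:
    customers: dict[str, Customer] = field(default_factory=dict)
    accounts: dict[str, Account] = field(default_factory=dict)

    def add_customer(self, customer: Customer) -> None:
        # Uniqueness constraint on the primary key.
        if customer.customer_id in self.customers:
            raise ValueError(f"duplicate customer_id: {customer.customer_id}")
        self.customers[customer.customer_id] = customer

    def add_account(self, account: Account) -> None:
        # Referential constraint: the owning customer must already exist.
        if account.customer_id not in self.customers:
            raise ValueError(f"unknown customer_id: {account.customer_id}")
        if account.account_id in self.accounts:
            raise ValueError(f"duplicate account_id: {account.account_id}")
        self.accounts[account.account_id] = account


model = BankModel()
model.add_customer(Customer("C1", "Ada Lovelace", date(1990, 1, 1)))
model.add_account(Account("A1", "C1", "savings", date(2020, 6, 15)))
```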

As the organization grows, so does the volume of its data. A well-designed data storage management plan prevents large volumes of data from being scattered across multiple systems, where users end up creating redundant copies. It enables BFSI organizations to store data efficiently, securely, and in compliance with the law, making the data easy to find, access, share, process, and recover if lost. An organization can choose in-house infrastructure, cloud, or a hybrid platform for data storage. A data framework may consist of one or more data layers, each serving a distinct purpose and storing data in its own way. Depending on the organization’s vision, it may choose a relational database, a data lake, a lakehouse, a data mesh architecture, or a combination of these.

- Data Rules, Object-Oriented DBMS, and Polymorphic Data Store

A large amount of invalid or erroneous data can disrupt end services and create unpleasant experiences for both customers and the organization. A rule-based data validation approach, embedded systematically in the data integration framework, therefore helps produce consistently higher-quality data. It is often also necessary to store a segment of data as objects, alongside traditional structured storage systems such as relational databases. Object-oriented database management systems (ODBMSs) combine the features of object-oriented programming and database management systems to store complex data. They support object-oriented features such as encapsulation, polymorphism, and inheritance, along with database guarantees such as ACID properties. Banking organizations may consider an ODBMS when developing high-volume transactional websites. It could also be useful for a risk management application because it can provide a real-time view of the data.
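
A rule-based validation step can be as simple as a list of named predicates applied to every incoming record, with failures routed to a reject list that records which rules they broke. The rule names, the `ACC-` account format, and the currency list below are hypothetical; real rule sets come from the institution’s own data standards.

```python
import re
from dataclasses import dataclass
from decimal import Decimal, InvalidOperation
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str
    check: Callable[[dict], bool]


def is_finite_number(value) -> bool:
    """True if the value parses as a finite decimal (rejects 'abc', None, 'NaN')."""
    try:
        return Decimal(str(value)).is_finite()
    except InvalidOperation:
        return False


# Hypothetical rule set for illustration only.
RULES = [
    Rule("account_id_format",
         lambda r: re.fullmatch(r"ACC-\d{3,}", str(r.get("account_id", ""))) is not None),
    Rule("amount_is_number", lambda r: is_finite_number(r.get("amount"))),
    Rule("currency_supported", lambda r: r.get("currency") in {"USD", "EUR", "GBP"}),
]


def validate(records: list[dict], rules: list[Rule] = RULES):
    """Split records into valid ones and rejects annotated with failed rule names."""
    valid, rejected = [], []
    for record in records:
        failures = [rule.name for rule in rules if not rule.check(record)]
        if failures:
            rejected.append((record, failures))
        else:
            valid.append(record)
    return valid, rejected


incoming = [
    {"account_id": "ACC-001", "amount": "250.00", "currency": "USD"},
    {"account_id": "001", "amount": "abc", "currency": "USD"},
    {"account_id": "ACC-002", "amount": "10", "currency": "JPY"},
]
valid, rejected = validate(incoming)
```

Keeping the failed rule names with each rejected record makes the reject queue actionable for data stewards instead of silently dropping bad rows.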

- Data Quality Management

Failing to ensure data quality can have a ripple effect across a bank. Low data quality undermines privacy compliance, leading to more frequent mistakes and more significant failures, which is especially damaging for a business that operates in one of the world’s most highly regulated industries and handles vast volumes of sensitive data. Privacy compliance is only the beginning. Regional banks and credit unions must also adhere to various reporting regulations, including laws covering fair lending, customer data protection, informed customer decision-making, accurate disclosure, anti-money laundering, and capital adequacy. Failure to comply can lead to severe fines and reputational damage.

Beyond compliance, inadequate data quality can lead to poor decision-making. This matters most in risk management, one of the most critical functions a bank undertakes, which cannot be done effectively without the highest-quality data. A comprehensive data quality management (DQM) framework should be in place on all data platforms, especially in the BFSI sector. We broadly recommend five key steps to cover all the bases of DQM:

- Assess the quality of organizational data as the starting point. Techniques such as data profiling can be used to inspect and understand the data’s content and structure.

- Create a data quality strategy to improve and maintain the data’s quality. It is a continuous process and can be based on an enterprise-level set of rules or specific use cases. The organization can decide whether to use a feature-rich DQ tool or a custom module.

- Perform initial data cleaning to improve the data. This can be as simple as identifying and completing missing entries or removing duplicate entries.

- Implement the data quality framework, which is where the strategies come into action. It should integrate seamlessly with the data integration process or the business process, and DQM should eventually become a self-correcting, continuous process.

- Monitor the data quality processes so that DQM is not a one-time event. Constant monitoring and maintenance, along with timely reviews and updates, are essential to maintaining high data standards.
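
The assessment and monitoring steps above can start small: profile each column (null rate, distinct values, most common value) and compare the results against agreed thresholds on every load. This is a minimal standard-library sketch; the column names, sample records, and threshold values are assumptions, and dedicated DQ tools provide far richer profiling.

```python
from collections import Counter


def profile(records: list[dict], columns: list[str]) -> dict:
    """Per-column profile: null rate, distinct count, most common value."""
    total = len(records)
    report = {}
    for col in columns:
        # Treat None and empty strings as missing.
        present = [r.get(col) for r in records if r.get(col) not in (None, "")]
        report[col] = {
            "null_rate": (total - len(present)) / total if total else 0.0,
            "distinct": len(set(present)),
            "most_common": Counter(present).most_common(1)[0][0] if present else None,
        }
    return report


def breaches(report: dict, max_null_rate: dict[str, float]) -> list[str]:
    """Columns whose null rate exceeds the agreed threshold (for ongoing monitoring)."""
    return [col for col, limit in max_null_rate.items() if report[col]["null_rate"] > limit]


customers = [
    {"customer_id": "C1", "email": "a@example.com", "segment": "retail"},
    {"customer_id": "C2", "email": None, "segment": "retail"},
    {"customer_id": "C3", "email": "", "segment": "sme"},
    {"customer_id": "C4", "email": "d@example.com", "segment": "retail"},
]
report = profile(customers, ["customer_id", "email", "segment"])
alerts = breaches(report, {"customer_id": 0.0, "email": 0.1})
```

Running `profile` once gives the initial assessment; running `breaches` on every load turns the same measurements into the continuous monitoring described in the last step.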

- Data Warehousing

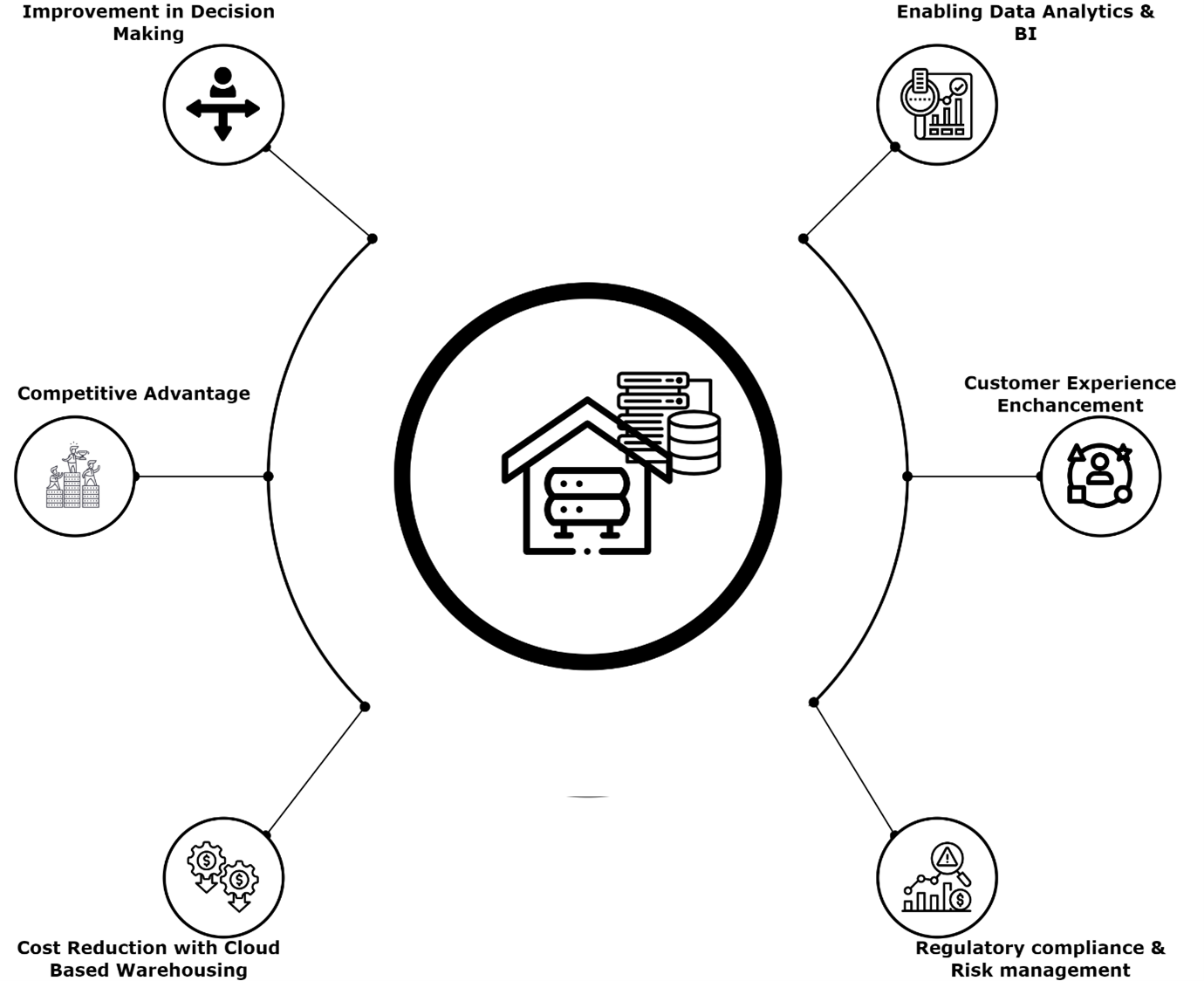

A data warehouse centralizes and consolidates large amounts of data from disparate sources. Its analytical capabilities allow organizations to derive valuable business insights from their data, improving decision-making and enabling better services and experiences. A well-designed data warehouse offers the banking sector numerous benefits.
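
Consolidated warehouse data is commonly organized as a star schema: a central fact table of events (here, transactions) joined to dimension tables that describe them. The sketch uses Python’s built-in `sqlite3` module purely so it runs anywhere; the table names and sample figures are made up, and a cloud warehouse would use its own SQL dialect and loading tools.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite does not enforce FKs by default
conn.executescript("""
CREATE TABLE dim_branch  (branch_id  INTEGER PRIMARY KEY, region TEXT NOT NULL);
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, product_name TEXT NOT NULL);
CREATE TABLE fact_transaction (
    txn_id       INTEGER PRIMARY KEY,
    branch_id    INTEGER NOT NULL REFERENCES dim_branch(branch_id),
    product_id   INTEGER NOT NULL REFERENCES dim_product(product_id),
    amount_cents INTEGER NOT NULL  -- store money as integer cents
);
""")
conn.executemany("INSERT INTO dim_branch VALUES (?, ?)", [(1, "North"), (2, "South")])
conn.executemany("INSERT INTO dim_product VALUES (?, ?)", [(10, "Savings"), (20, "Credit card")])
conn.executemany(
    "INSERT INTO fact_transaction VALUES (?, ?, ?, ?)",
    [(1, 1, 10, 50_000), (2, 1, 20, 12_500), (3, 2, 20, 7_500), (4, 2, 10, 20_000)],
)

# Typical analytical query: totals sliced by two dimensions.
rows = conn.execute("""
SELECT b.region, p.product_name, SUM(f.amount_cents) AS total_cents
FROM fact_transaction AS f
JOIN dim_branch  AS b ON b.branch_id  = f.branch_id
JOIN dim_product AS p ON p.product_id = f.product_id
GROUP BY b.region, p.product_name
ORDER BY b.region, p.product_name
""").fetchall()
```

Keeping descriptive attributes in dimensions and measures in the fact table lets new reporting slices be added by joining another dimension rather than reshaping the transaction data.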

To handle the massive amounts of data that banks generate, technologies like Hadoop and Spark have become go-to choices for storing and processing unstructured and semi-structured data, offering a scalable, cost-effective foundation for data warehousing workloads. More and more institutions are moving to cloud data warehousing solutions such as Snowflake, Azure Synapse Analytics, Amazon Redshift, or Google BigQuery to gain the scalability, efficiency, and accessibility they offer.

- Data Analytics & Data Democratization

An organization does not benefit from a large, consolidated volume of data in a data warehouse until it uses that data to provide insights, improve services, and enhance experiences for both customers and internal users. Loan disbursement is one popular use case where data analytics plays a critical role. On a traditional banking platform, loan disbursement often involves document submission, verification, and due diligence by the bank, and each of these steps adds delay. To gain a competitive advantage, neo-banks apply data analytics to real-time data, which lets them make decisions almost instantly. In essence, data analytics is crucial for banks to enhance operations, explore potential opportunities, identify target demographics for upcoming campaigns, or simply upsell their products. Here are some other key use cases where data analytics will soon become indispensable for banks:

- Providing 360-degree insights on a customer.

- Understanding the operations and services through data, performing predictive analytics, and upgrading features to reduce operational costs.

- Understanding target customer demographics, categorizing them based on data insights, and providing them with a personalized experience.

- Examining risks associated with credit, claims, and fraud, and improving risk management practices.

- Understanding market trends, embracing emerging practices, and staying ahead of the curve.
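
For the risk and fraud use case, a first-pass analytic is often a simple statistical screen that compares each new transaction with the customer’s own history. This sketch flags amounts more than `k` sample standard deviations above the historical mean; the threshold `k=3.0` and the sample figures are assumptions, and it illustrates the idea rather than serving as a fraud model.

```python
from statistics import mean, stdev


def flag_unusual(history: list[float], new_amounts: list[float], k: float = 3.0) -> list[float]:
    """Return new amounts more than k sample standard deviations above the historical mean.

    Requires at least two historical amounts (statistics.stdev needs two points).
    """
    mu = mean(history)
    sigma = stdev(history)
    threshold = mu + k * sigma
    return [amount for amount in new_amounts if amount > threshold]


past_card_spend = [120, 80, 100, 95, 105]   # made-up history for one customer
print(flag_unusual(past_card_spend, [110, 150, 2500]))
```

A per-customer baseline like this catches spend that is unusual for that individual, which a single global threshold would miss for low-spending customers.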

Data democratization is the process of making data available to everyone in an organization. If a marketing manager has to go through IT to access reports created through data analytics, decision-making slows down. Democratizing data helps eliminate siloed or outdated practices. It encourages users to actually use the data, empowering them to identify new opportunities, create revenue streams, and drive growth. Data democratization can safely unlock access to data stored in a data warehouse, data lake, or lakehouse. A modern data framework can avoid the fragmented data access common on legacy platforms by connecting these stores through a single interface.
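
Making access self-service and safe at the same time usually means routing requests through one governed interface that checks who is asking and masks sensitive fields. This is a minimal sketch under stated assumptions: the roles, dataset names, and masking policy are hypothetical, and the in-memory `CATALOG` stands in for a warehouse, lake, or lakehouse behind the interface.

```python
# Hypothetical access policy: which datasets each role may query and which columns are masked.
POLICY = {
    "marketing_manager": {"datasets": {"customer_summary"}, "masked": {"email", "phone"}},
    "risk_analyst": {"datasets": {"customer_summary", "transactions"}, "masked": {"phone"}},
}

# Stand-in for the underlying data stores.
CATALOG = {
    "customer_summary": [
        {"customer_id": "C1", "segment": "retail", "email": "a@example.com", "phone": "555-0100"},
    ],
    "transactions": [{"txn_id": "T1", "customer_id": "C1", "amount": "42.00"}],
}


def query(role: str, dataset: str) -> list[dict]:
    """Single entry point: check the role's access, then mask sensitive columns."""
    rules = POLICY.get(role)
    if rules is None or dataset not in rules["datasets"]:
        raise PermissionError(f"{role!r} may not read {dataset!r}")
    return [
        {col: ("***" if col in rules["masked"] else val) for col, val in row.items()}
        for row in CATALOG[dataset]
    ]


summary = query("marketing_manager", "customer_summary")
```

Centralizing the policy check means a marketing manager can self-serve the summary without IT involvement, while sensitive fields stay protected regardless of which store the data lives in.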

Conclusion

By following these key elements and best practices, financial institutions can build a modern data framework that not only supports their current needs but is also scalable and future-proof. This strategic approach ensures that data is managed efficiently and securely and is used to drive meaningful business outcomes. Embracing data modernization helps BFSI organizations stay competitive, comply with regulatory requirements, and deliver superior customer experiences in an increasingly data-driven world.

Implementing a modern data framework is a journey that requires careful planning and execution. However, the benefits of such a transformation (enhanced agility, better decision-making, and improved operational efficiency) make it a worthwhile investment for any forward-thinking financial institution.